Hey AI Product Manager!

So, you built an AI/ML (Artificial Intelligence/Machine Learning) model and demonstrated its performance to your business leaders, and now you want to put your model into action and show the business value…

Now starts the daunting task of putting together a coherent roadmap to roll out the AI/ML. The roadmap needs to accommodate business process owners, IT application owners, OCM (Organizational Change Management) advisors, GRC (Governance, Risk and Compliance) leads, Finance Controllers, and others you have probably never met before. This is a challenge many data-scientists-turned-AI/ML-product-managers run into when putting their AI/ML into action.

In this article, I am summarizing my point of view based on my experience building such roadmaps.

Rediscover the Business Process

The first step is to deepen your understanding of the business process you are targeting with your AI/ML, and to rediscover it. Let me explain why.

Business processes typically form the core of any organization and in many cases give the firm a competitive advantage. For example, Amazon’s competitive advantage in logistics is founded on a set of well-evolved and fine-tuned business processes.

In almost all cases, the AI/ML you have developed will cater to such a business process. Hence, it is important to understand the business process and see how AI/ML improves or optimizes it. So, work closely with the respective business process owners to understand the intent of the business process rather than its form.

But, wait, why rediscover it?

Well, optimizing business processes for scale and efficiency has been key to any organization’s health and performance. Traditionally, such optimization work has centered on human skills (and intelligence), coordination and tools.

AI/ML offers a radically different way of optimizing business processes, as it approaches the human skills and coordination problem differently. A human-centric process design has to account for task coordination and information flow between people, but an AI-centric process design doesn’t need to.

As a result, when applying AI/ML to business processes, it is important to rediscover and analyze the decision-making complexity and information flows assumed and embedded within the existing business process.

To simplify this analysis, I propose four business process archetypes, in increasing order of decision-making complexity and information flow:

- Static Archetype – These processes generally have workflows that are static, relatively straightforward, and simple to observe or experience. For instance, onboarding a new employee can fall under this category. These processes are measured for speed (read cost/time) and consistency, and are governed for resourcing and performance.

- Dynamic Archetype – The workflows within these processes are scenario heavy and use extensive logic and conditions to route the work. They are measured for compliance and risk, and governed for exceptions and deviations. An example is a product recall process, which typically involves several teams working under strictly defined guidelines.

- Intelligent Archetype – These processes have workflows that are loosely defined and judgment heavy. They are measured for the quality of the output and governed for impact and relevance. An example is the product design process, which is highly creative and experience heavy.

- Adaptive Archetype – These processes have workflows that are fluid and use multiple models to achieve a business outcome. They are measured by the business impact they make and are governed for ethics and the change they bring in. An example is acquiring a company: you use financial, strategy, and organizational models, and you adapt your approach frequently based on market conditions and negotiations.

Choose your AI/ML Deployment Modes

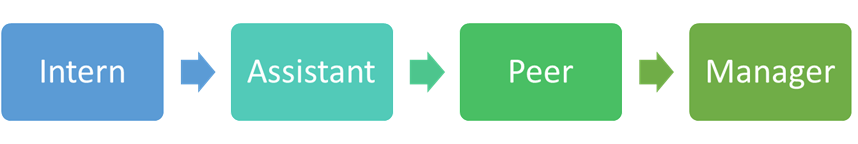

Once you have analyzed your business process, the next step is to explore the various ways you can deploy AI/ML into it. I propose a four-mode framework to plan your AI/ML deployment, borrowing ideas from my own experience and from the literature:

In its most basic form, AI can be implemented as an Intern, where its performance and behavior can be benchmarked, analyzed and understood. This is the time to stress test the AI and fine-tune its sensitivity for real-world action.

Once the AI is ready for action, it is advisable to introduce it as an Assistant, where it can provide support, influence, or even a nudge to a human for faster or more efficient decision making.

Over time, as confidence and trust in the AI build, you can carve out an ‘area of responsibility’ for the AI, effectively making it the owner of that process area. This ‘Peer’ mode is where you can realize some hard and tangible value from your AI efforts.

Finally, in some cases, AI can be entrusted with some managerial tasks too, such as distributing, coordinating and evaluating the work across the business processes.

Please note that it is not always necessary to follow these steps sequentially. Some steps may be optional or skipped entirely, depending on several factors and on the process under consideration.

Building your Roadmap

Business processes may have varying degrees of sensitivity towards automation or change, especially in areas that involve decision making. AI Product Leads should work with the respective process owners to evaluate several factors before developing the deployment roadmap.

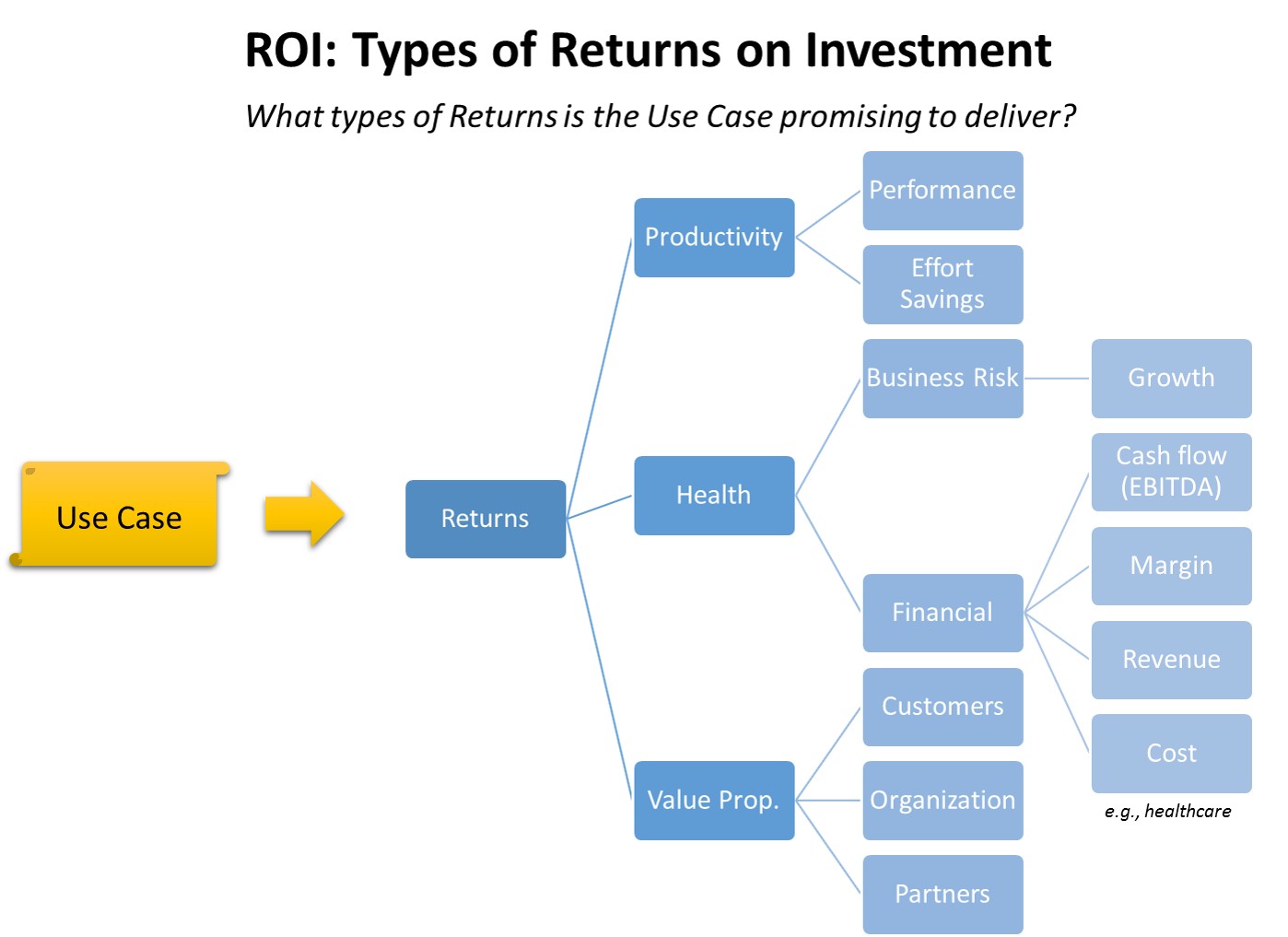

I will describe four of these factors that I think are important. Each of these four factors has two top sub-factors:

- Disruption: How much disruption are we introducing?

    - Upskilling or Reskilling – Does the AI drive reskilling or upskilling of people across the process chain?

    - Automation – Does the AI make any of the existing roles redundant?

- Risk: What is the incremental risk we are introducing?

    - Risk and Liability – Are there any risks or liabilities arising out of AI doing the step? Did you assess them?

    - Accountability – Who will you hold accountable for performance gaps or missteps tracing back to AI? Do you need AI to explain the rationale behind the decisions it makes?

- Design: How do we account for co-existence and feedback?

    - Learning – Did you architect a learning loop into your AI? How do you plan to keep AI relevant to its intended job function on an ongoing basis?

    - Integration – Did you figure out all the possible integration points when deploying AI at a process step? For instance, some steps may need RPA (Robotic Process Automation), digital upgrades, or integration with legacy systems.

- Change: How do we future-proof AI/ML against change?

    - Adaptability – How do you plan to ensure AI can still do the job and adapt even if the workflow mutates?

    - New Skills – How do you update AI with new skills as they are needed, without hampering existing performance?

The above considerations should provide a qualitative frame of reference for developing your AI/ML roadmap or rollout plan, and can also serve as a phase-gate checklist.
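If you want to track these considerations per process step, one option is to capture them in a small script. The sketch below is only an illustration: the factor and sub-factor names come from the list above, while the class name, the "addressed" flag, the example process step, and the rule that a gate passes only when every sub-factor has been reviewed are all my own assumptions. Adapt it to however your organization runs phase gates.

```python
from dataclasses import dataclass, field

# Factor and sub-factor names are taken from the article.
# Everything else (class, methods, gate rule) is a hypothetical illustration.
FACTORS = {
    "Disruption": ["Upskilling or Reskilling", "Automation"],
    "Risk": ["Risk and Liability", "Accountability"],
    "Design": ["Learning", "Integration"],
    "Change": ["Adaptability", "New Skills"],
}


@dataclass
class PhaseGateChecklist:
    process_step: str
    # Sub-factors that the AI product lead and process owner have reviewed.
    addressed: set = field(default_factory=set)

    def mark_addressed(self, sub_factor: str) -> None:
        """Record that a sub-factor has been reviewed for this process step."""
        known = {s for subs in FACTORS.values() for s in subs}
        if sub_factor not in known:
            raise ValueError(f"Unknown sub-factor: {sub_factor}")
        self.addressed.add(sub_factor)

    def open_items(self) -> dict:
        """Return the sub-factors not yet reviewed, grouped by factor."""
        gaps = {}
        for factor, subs in FACTORS.items():
            missing = [s for s in subs if s not in self.addressed]
            if missing:
                gaps[factor] = missing
        return gaps

    def passes_gate(self) -> bool:
        """Assumed rule: the gate passes only when nothing is left open."""
        return not self.open_items()


# Example usage with a made-up process step.
checklist = PhaseGateChecklist("invoice approval")
checklist.mark_addressed("Automation")
checklist.mark_addressed("Risk and Liability")
for factor, missing in checklist.open_items().items():
    print(f"{factor}: still open -> {', '.join(missing)}")
print("Gate passed:", checklist.passes_gate())
```

The checklist stays qualitative on purpose: it only records whether each question has been discussed, not how well it was answered, which remains a judgment call between you and the process owner.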

I would like to end this article with a note on the current state of OCM (Organizational Change Management). OCM frameworks such as ADKAR make a few fundamental (human-centric) assumptions about the nature of the changes that can occur in a business process. With the explosion of AI/ML and the changing nature of what qualifies as intelligence, it is time for us to build OCM 2.0.

What do you think about this article? I would love to learn from your experiences in putting AI/ML to work in your domain. Please do share.